Generated by Nano Banana Pro with prompt “Wide, realistic photograph from the top of a ski mountain showing two diverging runs: one steep, straight black-diamond slope dropping sharply downward, and one gentler blue run curving off to the right. No people visible. Natural winter light, editorial photography style, restrained color grading”

Claude vs Codex: Practical Guidance From Daily Use

Generation vs analysis, and why the difference matters

I have been using AI coding agents heavily to amplify the output of our two-person product team at Outlyne. My co-founder, Jeremy Willer, is a brilliant product designer and highly capable traditional web developer (HTML and CSS), while I’m a full-stack software engineer. Over the last decade, React and JSX (now TSX) have enabled Jeremy to become a productive contributor to our Typescript codebase. Then when AI coding agents became available and usable in Zed, our preferred editor, it was like we suddenly had an extremely productive junior engineer available around the clock to take on any coding task that previously would’ve fallen to me.

You’re almost certainly familiar with this story. A week later, I was staring at a backlog of PRs to review totalling more than 20K changed lines of code, and the overall code quality of the PRs was quite low. Claude Sonnet 3.7 was absolutely unrivaled at generating “working” code, and absolutely incapable of structuring that code to be maintainable and resilient. It was like working with a genius-level golden retriever on acid — boundless enthusiasm, impressive output, zero architectural judgment.

My productivity ground to a halt as I spent the next two weeks just reviewing and fixing PRs, miserable and heading fast towards burnout. Bugs made it into production and ultimately cost me weeks of debugging. We quickly realized that we could use the agents to put together feature prototypes and try them out and gather feedback, but that we absolutely couldn’t use them to blindly generate PRs intended for deployment. “Blindly” is doing real work there: my fundamental rule is that all code must be seen (reviewed) by a human who understands what it’s doing. And we also realized that working through a plan or spec before starting a task was essential.

Claude Sonnet 4.5

The agents have come a long way since then. Claude Sonnet 4.5 (and Opus 4.5 when needs demand it) is still unrivaled at code generation, and its instincts around maintainability have improved. We’ve also substantially tightened our AGENTS.md rules (symlinked as CLAUDE.md) to steer it towards our best practices and away from common mistakes, making the output easier to review and merge.

For example, we added a rule to address Claude’s tendency to make props optional by default. When adding a feature that requires new data, the easiest path is newProp?: string, because it compiles everywhere immediately. This creates silent failures, either immediately or sometime in the future: components render and TypeScript is happy, but features don’t work because the prop isn’t being passed. We now instruct the agents to make props required unless they have a meaningful default or the component genuinely handles the undefined case:

- **Default to required props; only make props optional when truly necessary**

- Props should be required by default unless they have a meaningful default value or the component genuinely functions without them

- Avoid making props optional just for theoretical flexibility—this leads to silent failures where UI controls don’t work but TypeScript doesn’t catch missing props

- If a component needs a prop to function correctly (even if it can render without throwing an error), make that prop required

- Only use optional props when: the component has a sensible default behavior, the prop is genuinely optional for the use case, or the component explicitly handles the undefined state in a meaningful way

- Example: `onClick` handlers should typically be required if the component is meant to be interactive

We use Claude heavily to plan out features and rearchitectures and migrations, storing them as markdown files in a root plans/ folder in our repo. We also use it for implementing distinct tasks in those plans, or for simple one-off coding tasks we can describe with enough detail directly in the prompt, and while I rarely if ever have been able to one-shot anything of any significant complexity with a result to my satisfaction, Claude can usually get 90% of the way there.

Where it consistently fails, however, is code review. It rarely finds any important issues and more often than not ends with baseless claims on how “production-ready” the code is, while the issues it does raise are generally some particularly toxic combination of marginal in scope and misguided.

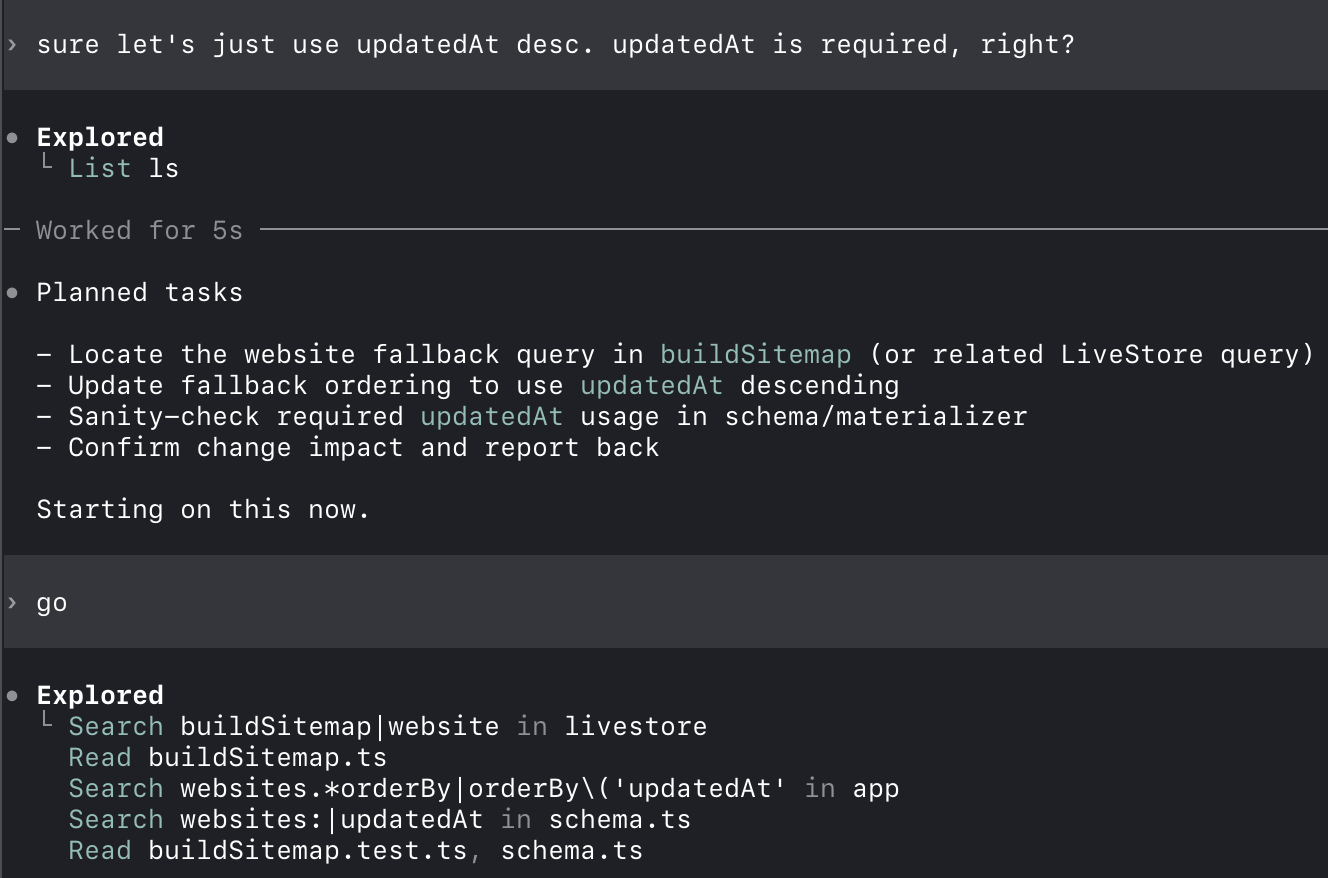

OpenAI Codex

A couple of months ago, I was persuaded by Peter Steinberger’s “Just Talk To It” blog post to try out the codex CLI. Coming from claude, I was genuinely perplexed by Peter’s enthusiasm. I frequently found myself asking codex to do something clear and straightforward, checking in on something else in the meantime, then coming back to find that it had thought through a plan, listed a few bullet points, said it was going to start… and then stopped. I kept having to encourage it onward. I tried queueing up “commit and continue” commands like Peter recommends in that post, but I can’t get codex to git commit successfully. See this exchange, where codex tells me “Starting on this now”, then stops until I prompt it with “go”. Truly confounding.

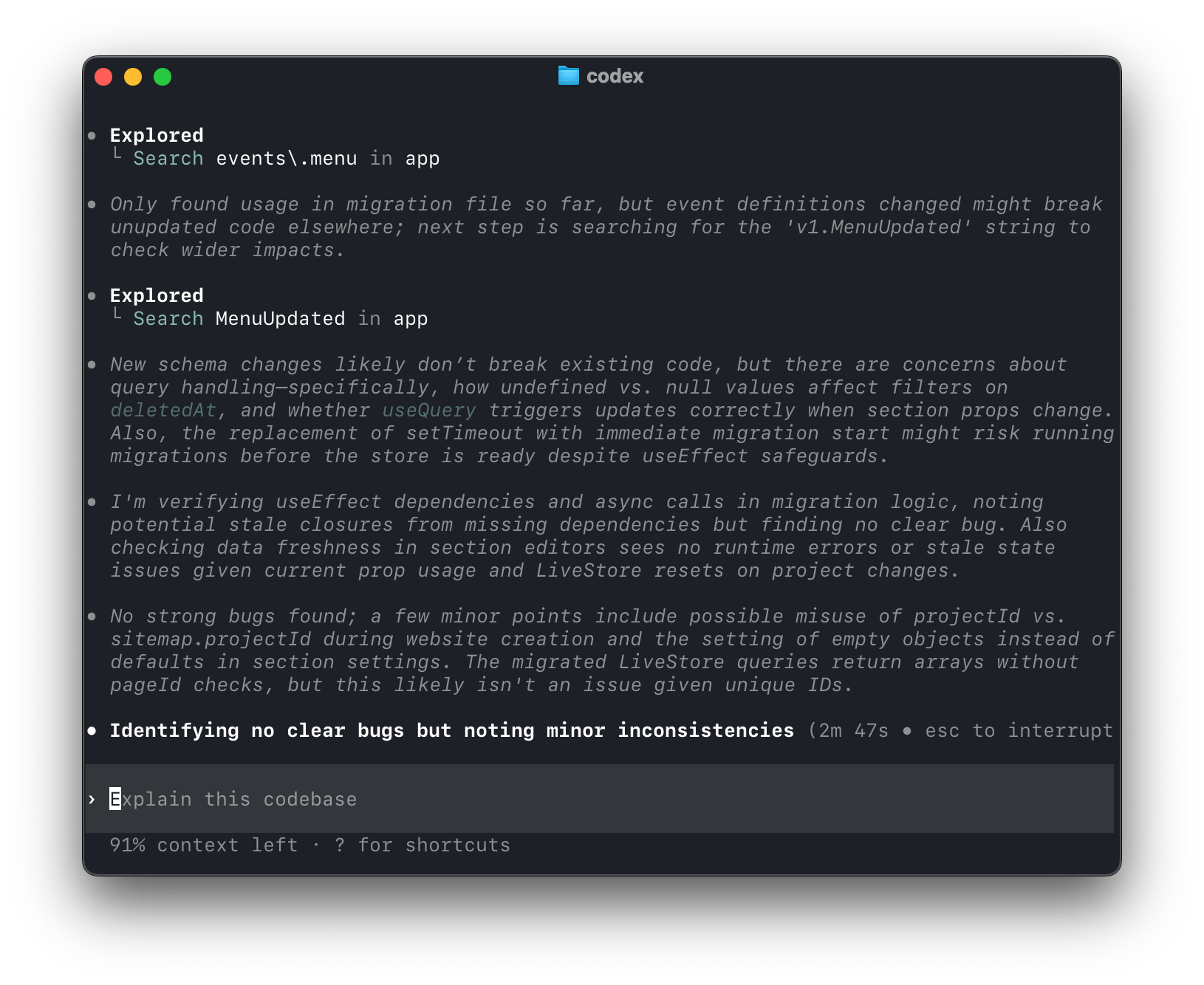

All that said, I have very much come to appreciate codex (mostly using gpt-5.1-codex-max). It has never told me that a PR is “production-ready”, nor does it constantly blow smoke up my ass. It’s much more reserved with praise and enthusiasm. It just feels like a more serious coding partner. I don’t use it as often for pure coding tasks, because I still find there’s more friction in the process. However, it occurred to me that the more serious nature of codex could lend itself to more effective code review. I then discovered that codex has a /review slash command that lets you review the current branch “PR-style” (against the base branch of your choosing), uncommitted changes, or a specific commit.

So I tried it out and holy crap. It’s sooooo much better than Claude or Gemini. Watching it go through the code changes is a trip. It will say I’m reviewing SectionContentEditor and related hooks for handling undefined data safely, and considering stale data risks from useEffect dependencies and useEffectEvent usage. I’m also checking event schema changes, especially optional fields affecting migrations, and assessing whether materializers and tests properly incorporate new event fields like websiteId. And then I will say thank god someone is paying attention.

Here’s an excerpt from a review of an auth flow refactor:

Key Findings

- Login/register routes now immediately redirect to

${LOGGED_IN_HOME}?auth=loginand${SIGNUP_REDIRECT}?auth=registerwith no loader/action/auth form (packages/main/app/routes/_auth.login.tsxand_auth.register.tsx). This removes the UI for logging in or signing up and assumes the destination route is publicly reachable; ifLOGGED_IN_HOMEis still auth-protected, users will hit an infinite redirect loop. All flows/tests expecting the actual forms will break.SignUpInterruptpopover is no longer rendered in the project page (packages/main/app/routes/projects.$projectId.tsx), but the e2e suite still expects it and the popover id remains referenced in tests. This regresses the in-project auth modal and will fail the Playwright specs around avatar menu auth(tests/e2e/avatar-menu-auth.spec.ts).

This is a superpower. It’s become an essential part of my workflow and I use it as both the first and last reviewer on any changeset. I also assign GitHub Copilot as a reviewer on every substantial PR. It typically makes 5+ suggestions per PR with 1-2 useful ones. Two recent examples:

- Non-unique fallback

idattributes for a checkbox component - Validation state interactions where clearing one thing could inadvertently clear errors from unrelated validation logic

That’s enough signal to justify the tedious process of dismissing invalid suggestions. But Copilot’s suggestions are always localized to the change and its immediate surroundings. It’s never caught an architectural issue like the auth redirect loop above. Those require the kind of system-level reasoning that only codex demonstrates.

That’s how I’m using AI coding agents today. It will almost certainly change in a few months. I’m already suspicious—though not yet confident—that gpt-5.2-codex is a less thorough reviewer than gpt-5.1-codex-max based on how quickly it completes reviews and the scenarios it seems to consider.

What I’m more confident about is this: different agents excel in different cognitive modes. Some are exceptionally good at generating code, while others are far better at analyzing it. Matching the tool to the cognitive mode matters more than any individual model upgrade.